CPSC 330 Lecture 11: Midterm review

Andrew Roth (Slides adapted from Varada Kolhatkar and Firas Moosvi)

Announcements

- Midterm 1 this week!

- Important information about midterm 1

- https://piazza.com/class/m4ujp0s4xgm5o5/post/204

- Good news for you: You’ll have access to our course notes in the midterm!

- Practice midterm questions available on PL

- You can see an example of all the info you will have access to in the last question.

iClicker Exercise 10.1

iClicker cloud join link: https://join.iclicker.com/HTRZ

Select all of the following statements which are TRUE.

- Price per square foot would be a good feature to add in our

X.

- Price per square foot would be a good feature to add in our

- The

alphahyperparameter ofRidgehas similar interpretation ofChyperparameter ofLogisticRegression; higheralphameans more complex model.

- The

- In

Ridge, smaller alpha means bigger coefficients whereas bigger alpha means smaller coefficients.

- In

iClicker Exercise 10.2

iClicker cloud join link: https://join.iclicker.com/HTRZ

Select all of the following statements which are TRUE.

- We can use still use precision and recall for regression problems but now we have other metrics we can use as well.

- In

sklearnfor regression problems, usingr2_score()and.score()(with default values) will produce the same results.

- In

- RMSE is always going to be non-negative.

- MSE does not directly provide the information about whether the model is underpredicting or overpredicting.

- We can pass multiple scoring metrics to

GridSearchCVorRandomizedSearchCVfor regression as well as classification problems.

- We can pass multiple scoring metrics to

Ridge and RidgeCV

- Ridge Regression:

alphahyperparameter controls model complexity. - RidgeCV: Ridge regression with built-in cross-validation to find the optimal

alpha.

alpha hyperparameter

- Role of

alpha:- Controls model complexity

- Higher

alpha: Simpler model, smaller coefficients. - Lower

alpha: Complex model, larger coefficients.

Regression metrics: MSE, RMSE, MAPE

- Mean Squared Error (MSE): Average of the squares of the errors.

- Root Mean Squared Error (RMSE): Square root of MSE, same units as the target variable.

- Mean Absolute Percentage Error (MAPE): Average of the absolute percentage errors.

Applying log transformation to the targets

- Suitable when the target has a wide range and spans several orders of magnitude

- Example: counts data such as social media likes or price data

- Helps manage skewed data, making patterns more apparent and regression models more effective.

TransformedTargetRegressor- Wraps a regression model and applies a transformation to the target values.

Midterm review

What is machine learning

- ML uses data to build models that identify patterns, make predictions, or generate content.

- It enables computers to learn from data.

- No single model is suitable for all situations.

When is ML suitable?

- ML excels when the problem involve identifying complex patterns or relationships in large datasets that are difficult for humans to discern manually.

- Rule-based systems are suitable where clear and deterministic rules can be defined. Good for structured decision making.

- Human experts are good with problems which require deep contextual understanding, ethical judgment, creative input, or emotional intelligence.

Terminology

- Features (

X) and target (y) - Examples

- Predictions

- Accuracy, error

- Parameters and hyperparameters

- Decision boundaries

ML fundamentals

Important concepts

- What is a baseline? Why do we use them?

- Why do we split the data? What are train/valid/test splits?

- What are the benefits of cross-validation?

- What is underfitting and overfitting?

- What’s the fundamental trade-off in supervised machine learning?

- What is the golden rule of machine learning?

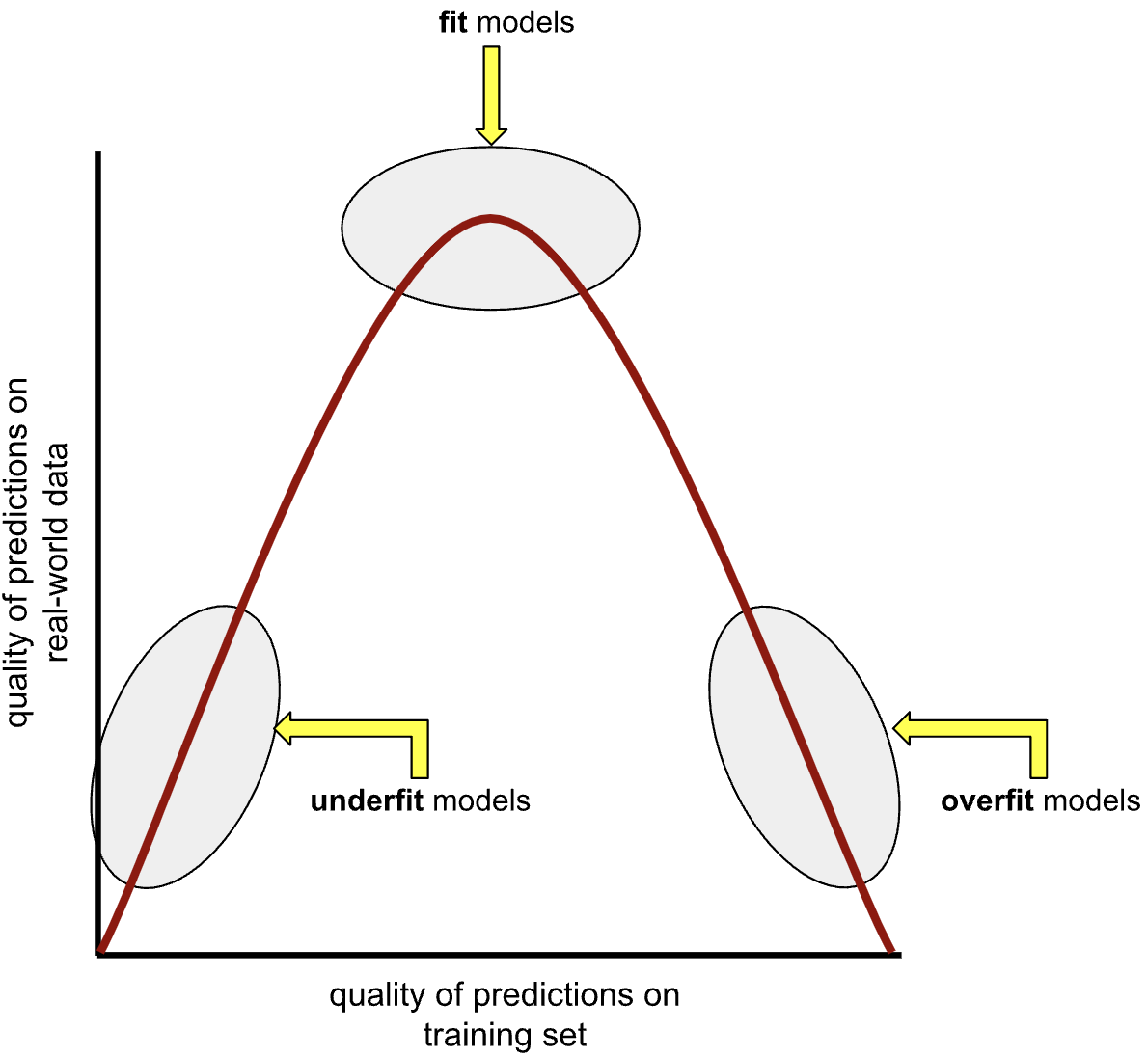

Overfitting and underfitting

- An overfit model matches the training set so closely that it fails to make correct predictions on new unseen data.

- An underfit model is too simple and does not even make good predictions on the training data

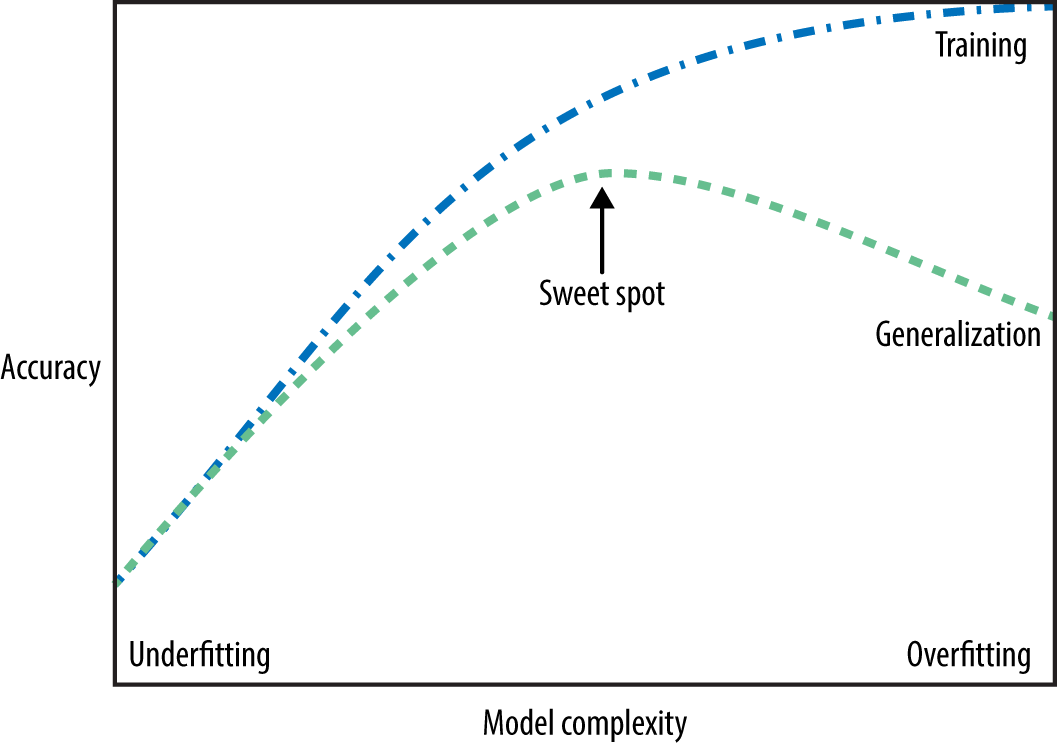

The fundamental tradeoff

- As you increase the model complexity, training score tends to go up and the gap between train and validation scores tends to go up.

- How to pick a model?

Models

Supervised models we have seen

- Decision trees: Split data into subsets based on feature values to create decision rules

- k-NNs: Classify based on the majority vote from k nearest neighbors

- SVM RBFs: Create a boundary using an RBF kernel to separate classes

- Linear models: Assumption that the relationship between

Xandyis linear

Comparison of models

| Model | Parameters and hyperparameters | Strengths | Weaknesses |

|---|---|---|---|

| Decision Trees | |||

| KNNs | |||

| SVM RBF | |||

| Linear models |

Transformers

sklearn Transformers

| Transformer | Hyperparameters | When to use? |

|---|---|---|

SimpleImputer |

||

StandardScaler |

||

OneHotEncoder |

||

OrdinalEncoder |

||

CountVectorizer |

||

TransformedTargetRegressor |

Data leakage

Features

- Using features that are directly related to the target can cause data leakage.

- Example: If you’re building a model to predict the churn rate of customers in a subscription service (churned or not churned) and you are using a feature like “account deactivation date”.

- Example: If you’re building a model to predict the churn rate of customers in a subscription service (churned or not churned) and you are using a feature like “account deactivation date”.

- If a feature essentially gives away the answer, the model might perform exceptionally well during training but fail to generalize to new, unseen data.

Preprocessing

- Incorporating information from the validation or test data during the preprocessing phase (e.g., scaling based on the distribution of the entire dataset instead of just the training set) can also lead to leakage. This can happen if the transformations applied to the training data are influenced by the whole dataset, thus indirectly feeding information about the test set into the model during training.

Preprocessing to avoid data leakage

Pipelines

- Pipeline is a way to chain multiple steps (e.g., preprocessing + model fitting) into a single workflow.

- Simplify the code and improves readability.

- Reduce the risk of data leakage by ensuring proper transformation of the training and test sets.

- Automatically apply transformations in sequence.

Column Transformers

- In what scenarios do we use column transformers?

- Different transformations for different types of columns (e.g., scaling numerical data, encoding categorical data).

- Handle datasets with heterogeneous data types effectively.

Linear models

- How prediction is done

- Coefficient interpretation

- Impact of scaling

- Effect of hyperparameters

- Advantages of getting probability scores instead of hard predictions during classification (Logistic)

Hyperparameter Optimization

| Method | Strengths/Weaknesses | When to use? |

|---|---|---|

| Nested for loops | ||

| Grid search | ||

| Random search |

Classification Metrics

- Confusion matrix

- FP, FN, TP, TN

| Metric | How to generate/calculate? | When to use? |

|---|---|---|

| Accuracy | ||

| Precision | ||

| Recall | ||

| F1-score | ||

| AP score | ||

| AUC |

Regression Metrics

| Metric | How to generate/calculate? | When to use? |

|---|---|---|

| MSE | ||

| RMSE | ||

| r2 score | ||

| MAPE |